In future wars, will human soldiers be replaced by weapons that think for themselves?

Lots of remotely controlled systems are already on the battlefield. In 2012, I spoke with scientists, analysts — and the nation’s top military officer — about how remote engagement and autonomous systems might be changing the American way of war. Here is the CBS Radio documentary that resulted from those conversations.

When we humans go to war, our least favorite way is hand to hand, face to face.

“It speaks to human nature,” says Massachusetts Institute of Technology Professor Missy Cummings, a former Navy fighter pilot. “We don’t really like to kill, and if we are going to kill, we like to do it from far away.”

Over centuries that has led to creation of weapons that allowed us to separate ourselves from our adversaries — first by yards, then miles. Now, technology allows attacks half a world away.

Until a decade ago, most of the remote engagement capability was owned by the U.S. or Israel. Not anymore.

Unmanned platforms (in the air, on the ground, and on or under the water) are becoming less and less expensive. So are the sensors that help guide them. And nanotechnology is making them smaller.

Today, U.S. soldiers in Afghanistan launch throw-bots into the air by hand, and mini-helicopters deliver frontline supplies by remote control. With artificial intelligence added to the mix, some platforms now operate without even remote human control. An unmanned aircraft flown by an onboard computer recently refueled another unmanned plane in midair as it, too, flew completely on its own.

These tools of remote engagement are already changing modern battlefields. And some people worry we may not be giving enough thought to how much they’re going to change things.

Simon Ramo has been thinking about this sort of thing for a long time. 99 years old, he knows something about national security. Remember the defense firm TRW? He’s the R.

“A huge revolution in cost, in loss of lives, takes place,” says Ramo, “if you go to the partnership of man and machine — and let the robots do the dying.”

Such a partnership, he says, does more than save life and limb. It also saves the huge expense of maintaining a big military presence overseas.

Peter Singer of the Brookings Institution agrees that remote engagement allows modern military forces to “go out and blow things up, but not have to send people into harm’s way.”

But he says robot wars are much more complex than that.

“Every other previous revolution in war has been about a weapon that changed the how,” says Singer. “That is, a machine or system where it either went further, faster, or had a bigger boom.”

Robots, he says, fundamentally change who goes out to fight very human wars.

In 2012, Chas discussed the rise of drones on the battlefield, and potential dangers of autonomous weaponry, with then-Joint Chiefs Chair General Martin Dempsey.

“It doesn’t change the nature of war,” says General Martin Dempsey, chairman of the Joint Chiefs of Staff. “But it does in some ways affect the character of war.”

The nature of war, says Dempsey, is a contest of human will. The character, on the other hand: “What do you intend? How do you behave with it? And then what’s the outcome you produce?”

“This is not a system which we’ve just simply turned loose,” says the general. “It’s very precisely managed, and the decisions made are made by human beings, not by algorithms.”

What capability are those humans managing? Most importantly, battlefield commanders say, an ability to provide persistent surveillance and the intelligence that comes from it.

“When you have an aircraft that can fly over an evolving battlefield, and in an unblinking way observe the battlefield,” says Air Force Lieutenant General Frank Gorenc, “they have the ability to describe to manned aircraft that are coming in, that can provide the firepower, much more accurate data.”

Commanders whose unmanned systems roam on the ground or in and under water gain similar benefits. That’s why many say “don’t call them drones.” In military terminology, drones are dumbed-down vehicles capable of following only a predetermined path. In the air, pilots in smart planes used drones as targets. So while most people around the world have come to call them drones, the people operating them prefer the term unmanned systems.

Well, some of them. General Gorenc says even if there is no one in the driver’s seat, it takes a lot of humans to keep the systems working. “There’s hardly anything unmanned about it,” he says, “even in the most cursory of analysis. So it takes significant resources to do that mission.” A mission that is possible because as the vehicles have developed, so too have the sensors providing them an understanding of precisely where they are at any given time, and optics that have improved the images they collect and send back.

Besides loitering for hours or days over places commanders want to keep an eye on, what can these systems do? We will likely see more unmanned craft delivering supplies — meaning air crews or truck convoys will be put in less danger.

Dempsey says it is possible, too, that a wounded soldier could soon be bundled inside a remotely piloted aircraft for evacuation to a field hospital.

“Logistics resupply and casualty evac could certainly be a place where we could leverage technology and remote platforms,” he says.

And of course, as Georgetown University Professor Daniel Byman notes, some unmanned systems — most notably the Predator drone — can kill.

“It’s that persistent intelligence capability, to me,” says Byman, “that enables the targeting of individuals — where before you wouldn’t — in part because of the risk to the pilot, but also in part because you weren’t sure what else you might hit. And now you can be, not a hundred percent confident, but more confident than you were.”

There has been controversy about the two ways those drones deal death — by targeted or signature strikes.

“A targeted strike is based on a positive identification of a particular individual or particular group of individuals,” says Christopher Swift of the University of Virginia’s Center for National Security Law, “whether they’re moving in a convoy, or whether they’re at a fixed location, or whether they’re out on the battlefield.”

Signature strikes, on the other hand, use sensors to watch for patterns of behavior that seem suspicious, then launch an attack when it appears, to a computer algorithm, that the series of behaviors points to bad guys doing, or getting ready to do, bad things.

Signature strikes bring with them a greater risk of killing or wounding people seen as innocents. And death by remote control can be perceived as callous, prompting a backlash.

While recently in Yemen, Swift talked with a number of tribal leaders about the unmanned system attack that killed terrorist provocateur Anwar al-Aulaqi.

“They were more concerned about the drone strike on his 16-year-old son,” says Swift, “because they saw him as a minor, rather than as a militant, and there was some sympathy for him” — even though Swift says many of the same people thought the boy’s father got what he deserved.

Some civil liberties groups challenge the legality of both targeted and signature strikes. But Swift says he believes that “international law is not a restraint on our ability to do it. It’s a series of guidelines that tell us the things we should avoid in order to do these kinds of operations better.”

A key aspect of better, says Swift, is ensuring that remote engagement is always paired with human contact.

“You can’t get to the human dimension of managing these political and social relationships at a local level,” he says, “and understanding how local people see their own security issues if we’re just fighting these wars using drones, if we’re fighting from over the horizon.”

Not everyone acquiring unmanned craft will be concerned about tactical nuance. Reports in early October, for instance, indicated that Hezbollah fighters may have begun using an unmanned surveillance craft — flying it over sensitive sites in Israel.

Who is selling to customers on U.S. and Israeli “no sale” lists? China is in the game.

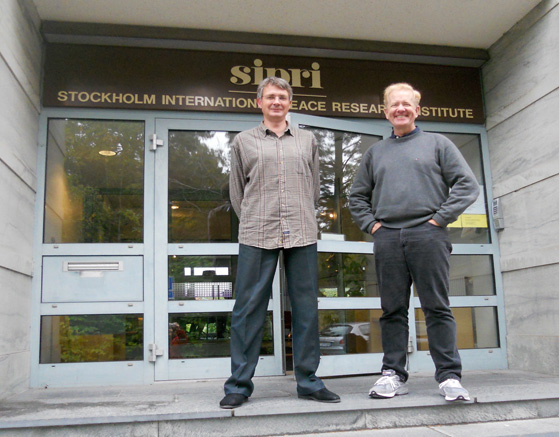

“They have imported, and actually stolen, a lot from Russia,” says Siemon Wezeman, who researches proliferation of unmanned systems at the Stockholm International Peace Research Institute. “They are now really on the way of developing technology which is getting on par with what you would expect from Western European countries.”

In Sweden, Chas visited with Siemon Wezeman of the Stockholm International Peace Research Institute. Wezeman had created a comprehensive list of where military-related drone technology was being used around the world, and how.

And Wezeman says more and more nations and groups are shopping for the technology.

“You see in the last few years even poor and underdeveloped countries in Africa getting involved in acquiring them, and in some cases even thinking about producing them.”

According to Wezeman, the majority of presently-available unmanned aerial vehicles (UAVs) are the sort used for surveillance. “Most of them still are unarmed. There are very few armed UAVs in service. But the development is in the direction of armed UAVs.”

In some ways, remote controlled war could prove a more effective tactic for small groups of bad guys, says National War College Professor Mike Mazarr — offering personal opinions on the topic, not necessarily those of the Defense Department.

“I think very often the U.S. is going to be trying to use them to achieve big national-level goals that are very challenging and difficult,” says Mazarr. “And other actors are going to be trying to achieve much more limited, discrete goals, to keep us from doing certain things.”

The use of any robots scares some people who worry about machines making potentially disastrous mistakes. Advocates of the technology offer the reminder that to err is human.

“Who makes more mistakes: humans or machines?” asks Byman. “The answer, of course, is: it depends. But often machines can avoid mistakes that humans would otherwise make.”

“It may take a human to do a final check on an engine, or turning the last centimeters on a screw,” says Dean Cheng, an analyst at the Heritage Foundation, “but getting the screws to that mechanic could well become a robotic function. And it would be faster, and probably more accurate.”

Robotic accuracy could bring improved safety to even manned aircraft when it comes to taking off and landing.

Retired Rear Admiral Bill Shannon, who until recently oversaw unmanned aircraft initiatives in the U.S. Navy, says that when onboard robotic systems interact with GPS and other sensor data, planes automatically “know their geodetic position over the ground. They land with precision, repeatable precision, regardless of reference to the visual horizon.”

Cummings adds that the U.S. Air Force, at first, insisted that human operators control the take-offs and landings of its remote aircraft. Those operators turned out to be more accident-prone than robotic systems. “From Day One,” she notes, “all the Army’s UAVs had auto land and take-off capability. And as a consequence they haven’t lost nearly as many due to human error in these areas.”

Still, after watching failures in some other supposedly smart systems — automated trading software on Wall Street, for instance — many say they fear movement toward unmanned systems that think for themselves.

“If you optimize [these systems] to work very quickly,” says Byman, “to try to take shots that we’d otherwise miss — you’ll make more mistakes. If you optimize them to be very careful, you’ll miss opportunities. So there are going to be costs either way.”

The U.S. Army is funding research at Georgia Tech into whether it is possible to create an “artificial conscience” that could be installed in robots operating independently on a battlefield.

“There’s nothing in artificial intelligence or robotics that could discriminate between a combatant and a civilian,” says Noel Sharkey, a professor at the University of Sheffield, in the UK. “It would be impossible to tell the difference between a little girl pointing an ice cream at a robot, or someone pointing a rifle at it.”

“As you begin to consider the application of lethal force,” Dempsey adds, “I think you have to pause, and understand how to keep the man in the loop in those systems.”

So what if a battlefield robot does go haywire? Who is responsible?

“How do you do legal accountability when you don’t have someone in the machine?” worries Singer. “Or what about when it’s not the human that’s making the mistake, but you have a software glitch? Who do you hold responsible for these incidents of ‘unmanned slaughter,’ so to speak?”

“It could be the commander who sent it off,” speculates Sharkey. “It could be the manufacturer, it could be the programmer who programmed the mission. The robot could take a bullet in its computer and go berserk. So there’s no way of really determining who’s accountable, and that’s very important for the laws of war.”

That is why Cummings thinks we will not soon see the fielding of lethal autonomous systems. “Wherever you require knowledge,” she observes, “decisions being made that require a judgment, require the use of experience – computers are not good at that, and will likely not be good at that for a long time.”

Those who chafe at what they call a lack of imagination in the use of robots, though, say that should not stop or slow the integration of such systems in areas where they can do better than humans.

“There are some generals who assume that the role of robots is to help the human being that they assume is still going to be there,” says Ramo. “We’re talking about warfare being changed so that you should quit thinking about the soldier. He shouldn’t be there in the first place.”

Critics also say robots should not necessarily look like people, pointing to a robot being created to fight fires onboard Navy ships. It walks around on two legs and is about the height of a sailor carrying a fire hose.

Shannon says problems sometimes result when people who built manned systems try to create something similar, just minus the human. He encountered the phenomenon with designers determining what visual information would be available to those piloting unmanned aircraft from the ground.

“They don’t need to give the operator the pilot’s view,” he says. “They can give them, for example, a God’s-eye view of the air vehicle and the sensors interacting with the environment — as opposed to a very, very narrow view of what a pilot might see as they look out their windscreen.”

Shannon says he would frequently look for innovative design ideas from people not tied to systems built around human pilots. “Often I see it when I get someone who’s come from outside of aviation,” he says — someone with experience “for example, creating that environment in the gaming industry.”

The brave new world of robot wars could well require the nation to field a new type of warrior, as well.

“The person who is physically capable and mentally capable of engaging in high-risk dogfights,” notes Byman, “may be very different from the person who is a very good drone pilot.”

Cummings anticipates some in the military will find it difficult to accept such a shift. “Fundamentally, it raises that question about value of self,” she says. “‘If that computer can do it, what does that make me?’”

In the end, robots thrown into war efforts are put there for one reason: to win. Would it be possible to win a war by remote control?

“You could put together an elaborate strategy,” muses Mazarr, “that would affect the society, the economy, the national willpower of a country that, I could certainly imagine — depending on what was at stake, the legitimacy of its government, a variety of other things — of absolutely winning a war in these ways.”

The nation’s top military officer is not so sure. “It’s almost inconceivable to me,” says Dempsey, “that we would ever be able to wage war remotely. And I’m not sure we should aspire to that. There are some ethical issues there, I think.”

Another ethical consideration is raised by those who worry that remote engagement seems “bloodless” to those employing it.

“It always creates the risk that you’ll use it too quickly,” notes Byman. “Because it’s relatively low cost, and relatively low risk from an American point of view, [it’s possible] that you’ll be likely to use it before thinking it through. Use it even though some of the long term consequences might be negative.”

“You could increasingly be in a world where states are constantly attacking each other,” suggests Mazarr — “in effect, in ways that some people brush off and say, ‘well, that’s just economic warfare,’ or ‘it’s just harassment,’ but others increasingly see as actually a form of conflict.”

Finally, it is worth noting that the sensor information, so important to controlling unmanned systems, flows through data networks — webs susceptible, at least in theory, to being hacked.

“When you’re in the creation of the partnership of human beings and robots, you’re into cyber warfare,” says Ramo, “and you’ve got to be better than your enemies at that, or your robotic operations will not do you very much good.”

Susceptibility to being attacked with remote systems leads Mazarr to ask if the U.S., with its highly interlinked, interdependent economy, might do better to try to limit the use of remote controlled systems, rather than expanding their use.

“Given the likely proliferation of these kind of things to more and more actors,” he says, “given the vulnerability of the U.S. homeland, given the difficulty we have as a society in taking the actions necessary to make ourselves resilient against these kind of attacks, would it be better to move in the direction of an international regime to control, or limit, or eliminate the use of some of these things?”

Jody Williams thinks so. In 1997 she was awarded the Nobel Peace Prize for a campaign that created an anti-landmine treaty. “I know we can do the same thing with killer robots,” says Williams. “I know we can stop them before they ever hit the battlefield.” She’s working with the group Human Rights Watch in an effort to do so.